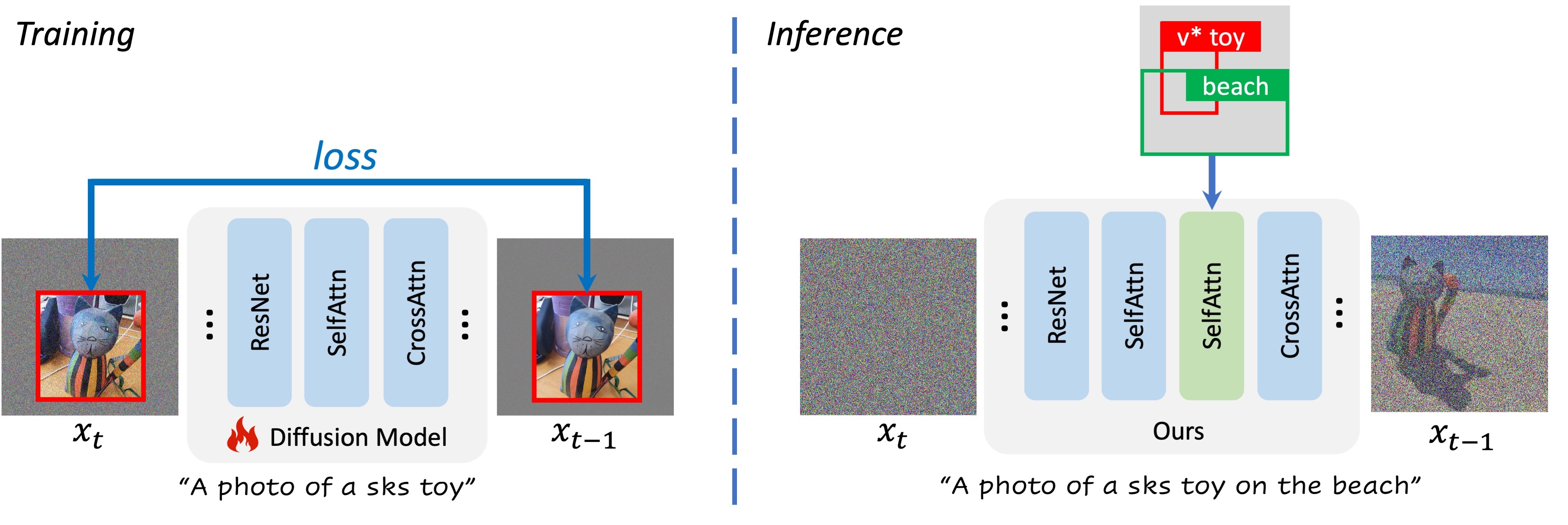

Text-to-image diffusion models have attracted considerable interest due to their wide applicability across diverse fields. However, challenges persist in creating controllable models for personalized object generation. In this paper, we first identify the entanglement issues in existing personalized generative models, and then propose a straightforward and efficient data augmentation training strategy that guides the diffusion model to focus solely on object identity. By inserting the plug-and-play adapter layers from a pre-trained controllable diffusion model, our model obtains the ability to control the location and size of each generated personalized object. During inference, we propose a regionally-guided sampling technique to maintain the quality and fidelity of the generated images. Our method achieves comparable or superior fidelity for personalized objects, yielding a robust, versatile, and controllable text-to-image diffusion model that is capable of generating realistic and personalized images. Our approach demonstrates significant potential for various applications, such as those in art, entertainment, and advertising design.
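The abstract does not spell out the data augmentation. One plausible reading is that the subject is randomly rescaled and repositioned during fine-tuning, so the identifier token cannot absorb a fixed location or size. The sketch below shows only that placement step. It assumes square object crops on a square canvas, and `sample_placement` and `to_pixels` are hypothetical helpers, not the authors' code.

```python
import random
from typing import Optional, Tuple

# Hypothetical helpers illustrating location/size augmentation.
# This is NOT the PACGen implementation; it only samples where and how large
# a (square) object crop would be pasted on a square training canvas.
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1), normalized to [0, 1]


def sample_placement(min_scale: float = 0.3,
                     max_scale: float = 1.0,
                     rng: Optional[random.Random] = None) -> Box:
    """Pick a random side length and top-left corner so the box fits the canvas."""
    if not 0.0 < min_scale <= max_scale <= 1.0:
        raise ValueError("require 0 < min_scale <= max_scale <= 1")
    rng = rng or random.Random()
    side = rng.uniform(min_scale, max_scale)  # relative object size
    x0 = rng.uniform(0.0, 1.0 - side)         # keep the box inside [0, 1]
    y0 = rng.uniform(0.0, 1.0 - side)
    return (x0, y0, x0 + side, y0 + side)


def to_pixels(box: Box, canvas: int) -> Tuple[int, ...]:
    """Convert a normalized box to integer pixel coordinates on a square canvas."""
    return tuple(round(v * canvas) for v in box)


# Usage: choose a placement for one training sample on a 512x512 canvas.
rng = random.Random(0)
x0, y0, x1, y1 = to_pixels(sample_placement(rng=rng), 512)
# The object crop would then be resized to (x1 - x0) pixels and pasted at (x0, y0).
```

Sampling the scale and the offset independently means every size appears at many positions. That variation is what keeps identity separate from layout under this assumption.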

PACGen offers the flexibility to effortlessly place your unique object at any desired location. The four distinct color-coded boxes on the left correspond to four resulting images on the right.

PACGen supports location control for multiple objects, including both user-provided unique objects and common objects. This capability demonstrates its remarkable potential for addressing image composition challenges.
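Box-based location control for several objects can be pictured as a list of (phrase, box) pairs. A minimal sketch follows. It assumes the GLIGEN-style convention of boxes given as normalized [xmin, ymin, xmax, ymax] coordinates, and `<my-dog>` stands for a hypothetical placeholder token for the user's personalized object. `GroundedObject`, `pixel_box_to_normalized`, and `build_grounding` are illustrative names, not part of PACGen's release.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical input format: each object is a text phrase plus a box in
# normalized [xmin, ymin, xmax, ymax] coordinates (GLIGEN-style convention).


@dataclass(frozen=True)
class GroundedObject:
    phrase: str  # e.g. "<my-dog>" (personalized, hypothetical) or "a red ball"
    box: Tuple[float, float, float, float]


def pixel_box_to_normalized(box_px: Sequence[float], width: int, height: int):
    """Map a pixel-space box (x0, y0, x1, y1) to [0, 1] coordinates."""
    x0, y0, x1, y1 = box_px
    return (x0 / width, y0 / height, x1 / width, y1 / height)


def build_grounding(objects: List[GroundedObject]) -> Tuple[List[str], List[List[float]]]:
    """Validate each box and split the objects into parallel phrase and box lists."""
    phrases, boxes = [], []
    for obj in objects:
        x0, y0, x1, y1 = obj.box
        if not (0.0 <= x0 < x1 <= 1.0 and 0.0 <= y0 < y1 <= 1.0):
            raise ValueError(f"invalid box for {obj.phrase!r}: {obj.box}")
        phrases.append(obj.phrase)
        boxes.append([x0, y0, x1, y1])
    return phrases, boxes


# Usage: one personalized object and one common object on a 512x512 image.
W = H = 512
scene = [
    GroundedObject("<my-dog>", pixel_box_to_normalized((32, 256, 224, 480), W, H)),
    GroundedObject("a red ball", pixel_box_to_normalized((320, 384, 416, 480), W, H)),
]
phrases, boxes = build_grounding(scene)
```

Keeping phrases and boxes as parallel lists with matching indices makes it simple to pass a personalized object and common objects through the same interface. Normalized coordinates keep the layout independent of output resolution.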

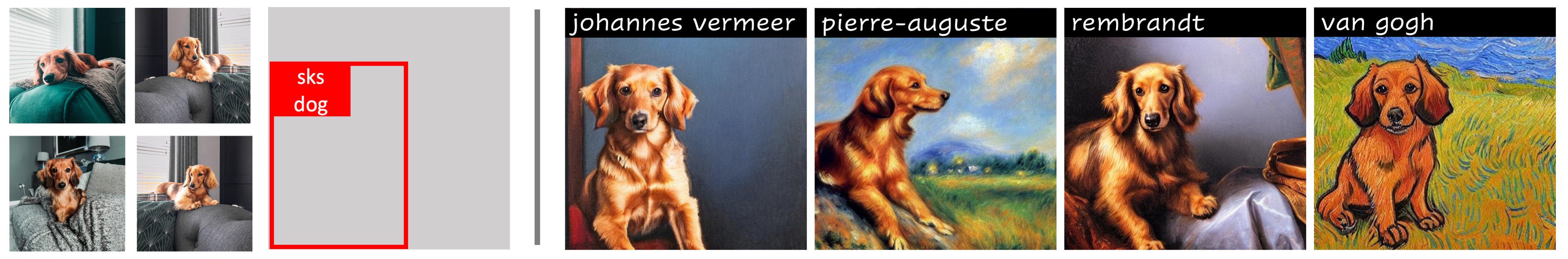

With PACGen, you can effortlessly place your favorite objects and pets at any location and apply a variety of art styles to create personalized, aesthetically appealing images.

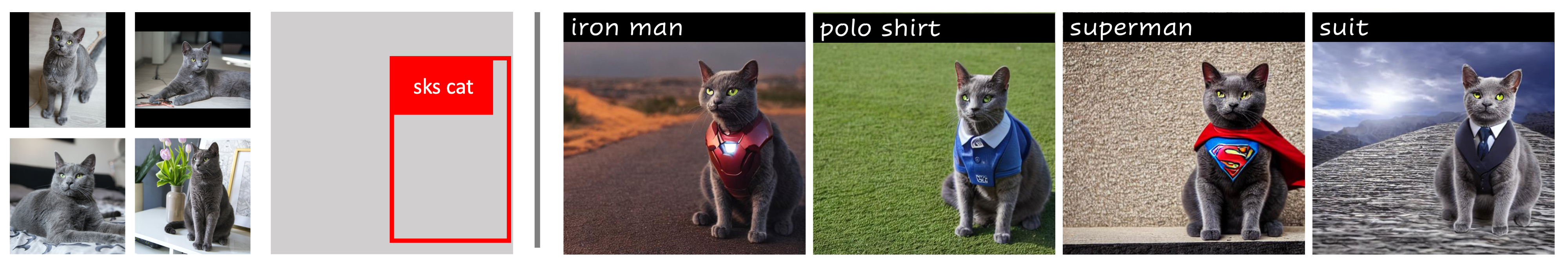

PACGen not only grants you full control over your object's location but also lets you add various accessories or outfits, enabling endless customization possibilities.

Additionally, PACGen allows you to modify object attributes, such as color, while still maintaining control over object location, enhancing the overall customization experience.

We also showcase how PACGen lets you create hybrids of your object and other common objects, sparking your imagination and inspiring unique designs.

We have strict terms and conditions for using the model checkpoints and the demo: their use is restricted to purposes that comply with the license agreements of Latent Diffusion Model and Stable Diffusion.

```bibtex
@article{li2023pacgen,
  author    = {Li, Yuheng and Liu, Haotian and Wen, Yangming and Lee, Yong Jae},
  title     = {Generate Anything Anywhere in Any Scene},
  publisher = {arXiv:2306.17154},
  year      = {2023},
}
```

This website is adapted from GLIGEN, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.